September 6th, 2010

I am currently part of a small but dynamic development team at Common Ground Publishing. We are building an application suite in Ruby on Rails to replace a single monolithic legacy application. Most of my efforts at Common Ground have centered on building their online authoring and publishing environment with active test deployments in Illinois schools. Since starting with Common Ground, I have been involved in every stage of their application lifecycle: gathering user requirements, UI design, database and data model design, implementation, migrating legacy data, testing and production deployment into schools. Key to this process has been its cyclical nature – the developers and designers along with stakeholders attend test-bed deployments to better understand user requirements and gather usability insights. These lessons learned are fed back into our agile development cycle as prioritized backlog items so that we iterate dynamically toward an optimal solution.

For more information on Common Ground software and activities, see their website:

http://commongroundpublishing.com/software/

Tags: javascript, jquery, legacy, MVC, postgres, ruby on rails, UI, web, workflow

Posted in Projects | No Comments »

June 1st, 2010

Salvatore Giovanni Martirano, internationally acclaimed American composer and Professor Emeritus at the University of Illinois, was my father. In 2008 my family dedicated his collected artistic works and papers to Creative Commons and began transitioning their care to the Center for American Music at the University of Illinois Archives. This process prompted a project to digitize the collection into a flexible, searchable online archive. In managing the various phases of this project, it has been my pleasure to work with image and video archivist Matthew Benkert, audio archivist Ken Beck and copyright lawyer Michael Antoline. The 20,000+ item collection encompasses audio tapes, films, videos, photos, documents, musical scores, letters, computer files and computer code. These items were transferred from a variety of analog formats into high-resolution digital representations, checked against quality-control measurements, classified, tagged with metadata and stored in a searchable database. A flexible web-based interface is now being built to serve these digital objects. The web interface and database system have been designed to accept other properly organized and metadata-tagged digital collections that have emerged as candidates during this process.

Tags: archive, javascript, jquery, mysql, ruby on rails, UI, web

Posted in Projects | No Comments »

June 1st, 2010

I highlight this project because it demonstrates my ability to quickly grok a large, unfamiliar codebase, even with little documentation, and to make meaningful modifications and contributions to that code. In this case I wrote a Java-based plug-in for an open-source Business Intelligence suite by Pentaho Corporation. Grokking the internals of this powerful system was non-trivial, but it was aided by my experience as the designer of D2K, another data-flow RAD environment for data integration and data mining.

Tags: datamining, java, linux, mysql, web, workflow

Posted in Projects | No Comments »

May 31st, 2010

This multi-layered communications infrastructure has been used chiefly for its real-time, multi-channel media processing and transport layers. The architecture of the system is multi-tiered, trading off the benefits of the UDP, TCP and RTP/RTCP protocols to form a very powerful media transport framework. The transport layer abstracts the RTP, UDP and TCP transport mechanisms to simplify application integration. The application layer allows multi-channel, real-time media processing applications to be built by linking modules together into graph structures (in this respect it is similar to D2K). This project was the result of various lines of development while at NCSA, beginning with my remote augmented reality work with Motorola and continuing with my work on collaborative video avatars embedded in the virtual reality space of Virtual Director.
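To make the two ideas above concrete, here is a minimal Python sketch of modules linked into a graph, with the transport hidden behind one interface. It is only an illustration under stated assumptions: the original system was written in C++, and every name here (`Module`, `Transport`, `LoopbackTransport`, `sink`, `build_demo`) is hypothetical rather than part of its API. The loopback transport simply records payloads where a real UDP, TCP or RTP sender would go.

```python
class Module:
    """One processing step in the graph (hypothetical name, not the original API)."""

    def __init__(self, name, fn):
        self.name = name
        self.fn = fn
        self.downstream = []

    def connect(self, other):
        """Link this module's output to another module; returns `other` for chaining."""
        self.downstream.append(other)
        return other

    def push(self, frame):
        """Process one frame and forward the result to every linked module."""
        result = self.fn(frame)
        if result is None:  # terminal modules (sinks) produce nothing to forward
            return
        for module in self.downstream:
            module.push(result)


class Transport:
    """Common interface that hides which protocol carries the data."""

    def send(self, payload: bytes) -> None:
        raise NotImplementedError


class LoopbackTransport(Transport):
    """Stand-in for a UDP, TCP or RTP transport: it only records what was sent."""

    def __init__(self, label):
        self.label = label
        self.sent = []

    def send(self, payload: bytes) -> None:
        self.sent.append(payload)


def sink(transport):
    """Wrap any transport as a terminal module of the graph."""

    def _send(frame):
        transport.send(frame)
        return None

    return Module(f"send-{transport.label}", _send)


def build_demo():
    """Build a small graph: capture fans out to a 'compressed' path and a raw path."""
    video = LoopbackTransport("rtp")    # assumed label only; no real RTP is used
    control = LoopbackTransport("tcp")  # assumed label only; no real TCP is used
    capture = Module("capture", lambda frame: frame)
    compress = Module("compress", lambda frame: frame[:4])  # toy stand-in: truncate
    capture.connect(compress).connect(sink(video))
    capture.connect(sink(control))
    return capture, video, control


source, video, control = build_demo()
source.push(b"frame-0001")
```

Because the graph only ever calls `send` on a `Transport`, swapping one protocol for another would not change the modules, which is the kind of simplification the transport abstraction is meant to provide.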

Tags: c++, multi-threading, networking, video, workflow

Posted in Projects | No Comments »

May 31st, 2010

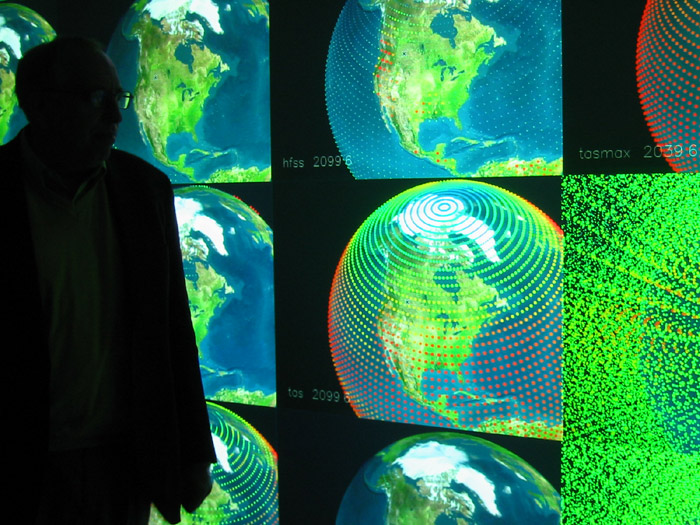

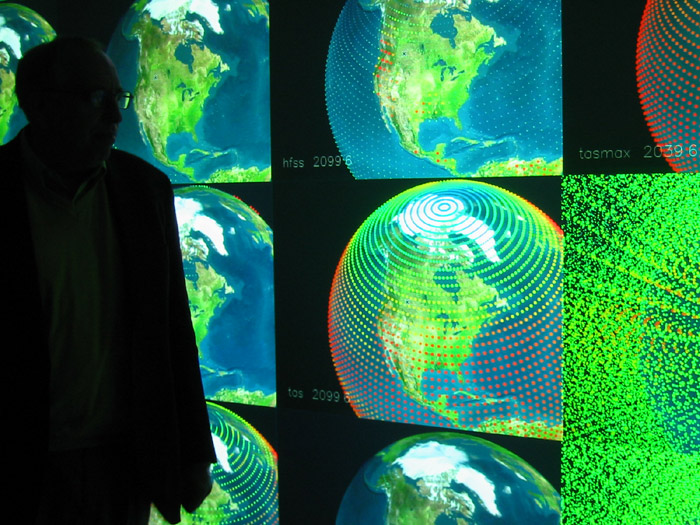

Visualizing the Global | Computer Modeling, Ecology, Politics

I organized this cross-disciplinary seminar at UIUC. I also developed a couple of demos: a 20-node tiled visualization of various layers output from the Parallel Climate Model, and an interactive visualization of global energy consumption from 1965 to 2002 for 73 countries, driven by data from the BP Statistical Review of World Energy. The Parallel Climate Model visualization involved a distributed Python backend which consumed, processed and loaded model data to a projected display cluster. To accomplish the data visualization and navigation on 20 parallel display nodes, I used Partiview, an open-source, C++-based interactive data visualization tool written by Stuart Levy.

Tags: c++, climate change, parallel computing, python, visualization

Posted in Projects | No Comments »

May 31st, 2010

The Universe: Distributed Virtual Collaboration and Visualization, a Collaboration with Stephen Hawking's lab for the International Grid Conference in Amsterdam

I was project manager for our group's efforts at this conference. We facilitated a collaboration between Stephen Hawking's lab in Cambridge, England and Mike Norman in San Diego, via the International Grid (iGrid) conference in Amsterdam. For this we first traveled to Cambridge and set up Stephen Hawking's lab with our Virtual Director remote virtual collaboration software. There we had the honor of meeting Stephen Hawking, the beginning of a long relationship of collaborations on big science visualization projects.

From the StarLight website: “Virtual Director and related technologies enable multiple users to remotely collaborate in a shared, astrophysical virtual world. Users are able to collaborate via video, audio and 3D avatar representations, and through discrete interactions with the data. Multiple channels of dynamically scalable video allow the clients to trade off between video processing and scene rendering as appropriate. At iGrid, astrophysical scenes are rendered using several techniques, including an experimental renderer that creates time-series volume animation using pre-sorted points and billboard splats, allowing visualizations of very-large datasets in real-time.” (http://www.startap.net/starlight/igrid2002/universe02.html)

And from one observer as reported in Calit2 news: “The Universe was the entry in the grand slam broadband sweepstakes from the National Center for Supercomputing Applications (NCSA) at University of Illinois at Urbana-Champaign, in collaboration with UCSD, USC, and the Stephen Hawking Laboratory at Cambridge University. It was my favorite — and clearly the biggest crowd pleaser. We wore 3-D glasses and viewed a latency-free video conferencing window that moved around showing video textures on floating cubes as areas of interest.”

Tags: c++, networking, visualization

Posted in Projects | No Comments »

May 31st, 2010

Touring with the Understanding Race and Human Variation exhibition.

Co-produced by Brent McDonald, UIUC Professor of Anthropology Brenda Farnell and me, Not a Mascot is a public service announcement which presents a diverse set of American Indian voices from the Chicago American Indian Movement (AIM). This video will be part of a large exhibition developed by the American Anthropological Association (AAA): “open to the public since January 2007, the 5,000 square-foot museum exhibit was developed by the Science Museum of Minnesota. The exhibit includes interactive experiences for visitors to learn the history of race, the role of science in that history and the subtle and obtrusive expressions of race and racism in institutions and daily lives. The exhibit is touring for at least six years and is expected to reach some three million visitors in cities across the US.”

Tags: video

Posted in Projects | No Comments »

May 31st, 2010

“IntelliBadge™, an NCSA experimental technology, is an academic experiment that uses smart technology to track participants at major public events. IntelliBadge™ was first publicly showcased at SC-2002, the world’s premier supercomputing conference, in the Baltimore Convention Center. This was the first time that radio frequency tracking technology, database management/mining, real-time information visualizations and interactive web/kiosk application technologies fused into operational integrated system and production at a major public conference.”

I was involved in this project at many levels, including setup and administration of Linux machines, integration of my collaborative video streaming software, multi-threading the application, mirroring code for this P2P architecture, and developing post-conference data analytics with custom Java-based software.

Tags: c++, datamining, java, linux, multi-threading, networking, rfid, visualization

Posted in Projects | No Comments »

May 31st, 2010

I was the original architect and author of this 100% Java data-mining system. Once known as D2K (Data to Knowledge), this system was most fundamentally a model for designing custom data-mining solutions. It was also a rapid application development environment for building those solutions, with a powerful run-time environment. I wrote the original prototype for D2K while working for the Automated Learning Group (ALG) at NCSA. Tom Redman (from the Mosaic project) would soon join the team to create the interface and RAD component of the system. David Tcheng’s ideas were the intellectual foundations of many of the algorithms implemented within the system. I did two more major rewrites of the infrastructure during my time in the ALG, during which this small research group grew from 3 to well over a dozen people increasingly focused on some aspect of D2K. D2K quickly turned into a flagship effort of NCSA, and certainly of the ALG, and subsequently became the central tool for River Glass, a startup company specializing in real-time analytics. There is now a project underway to develop a next-generation evolution of this software, a semantic-driven system called Meandre, of which I am only an interested observer.

- An article about some work done in D2K.

Tags: datamining, java, multi-threading, mysql, parallel computing, web, workflow

Posted in Projects | No Comments »

May 31st, 2010

Sound familiar? This highly experimental collaboration with Motorola Labs preceded by nearly a decade the recent smartphone release of the same name. In 2000-2001, a server hundreds of miles away at Motorola Labs would augment in real time the view of a user who wore a head-mounted display and a head-mounted mini-camera. The user's position and orientation in the room were inferred remotely from markers in the environment, and an updated 3D virtual scene was generated, returned and composited into the user's view. In 2001 this round-trip transport and processing benchmarked at less than 1/20th of a second. In other words, at a time when the average web page took 8 seconds to load (http://en.wikipedia.org/wiki/Network_performance), our app was remotely augmenting reality with a latency barely perceptible to humans. This project pushed the existing technologies to the limit: Windows, networking, compression, multi-threaded media transport and processing. I generally prefer a Unix-like environment for software development. However, when hardware driver support and availability demanded that Windows be the target OS for our Augmented Reality platform, I made the transition without going into Linux withdrawal.

Tags: c++, networking, visualization, windows

Posted in Projects | No Comments »