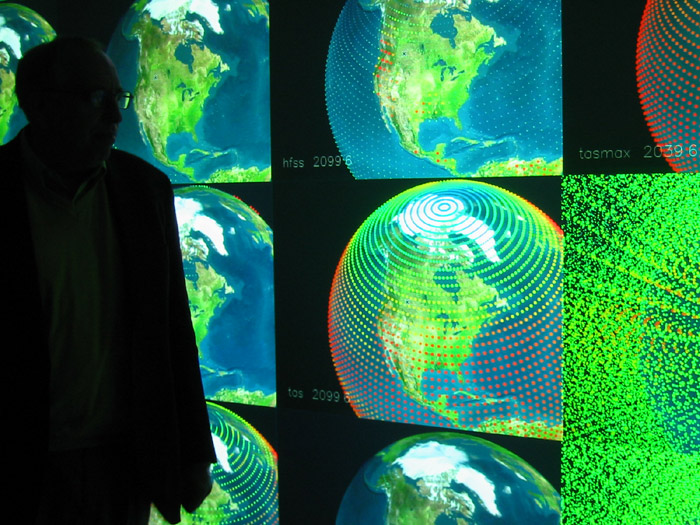

The Universe: Distributed Virtual Collaboration and Visualization, a Collaboration with Stephen Hawking's Lab for the International Grid Conference in Amsterdam

I was project manager for our group's efforts at this conference. We facilitated a collaboration between Stephen Hawking's lab in Cambridge, England, and Mike Norman in San Diego, via the International Grid (iGrid) conference in Amsterdam. For this, we first traveled to Cambridge and set up Stephen Hawking's lab with our Virtual Director remote virtual collaboration software. While there, we had the honor of meeting Stephen Hawking, which marked the beginning of a long relationship of collaboration on big science visualization projects.

From the StarLight website: “Virtual Director and related technologies enable multiple users to remotely collaborate in a shared, astrophysical virtual world. Users are able to collaborate via video, audio and 3D avatar representations, and through discrete interactions with the data. Multiple channels of dynamically scalable video allow the clients to trade off between video processing and scene rendering as appropriate. At iGrid, astrophysical scenes are rendered using several techniques, including an experimental renderer that creates time-series volume animation using pre-sorted points and billboard splats, allowing visualizations of very-large datasets in real-time.” (http://www.startap.net/starlight/igrid2002/universe02.html)

And from one observer as reported in Calit2 news: “The Universe was the entry in the grand slam broadband sweepstakes from the National Center for Supercomputing Applications (NCSA) at University of Illinois at Urbana-Champaign, in collaboration with UCSD, USC, and the Stephen Hawking Laboratory at Cambridge University. It was my favorite — and clearly the biggest crowd pleaser. We wore 3-D glasses and viewed a latency-free video conferencing window that moved around showing video textures on floating cubes as areas of interest.”